MODEL-AS-A-SERVICE

Build robust LLM-powered features in hours, not weeks

Evaluate, deploy and monitor LLM features through a UI instead of the command line

Speak with the founders

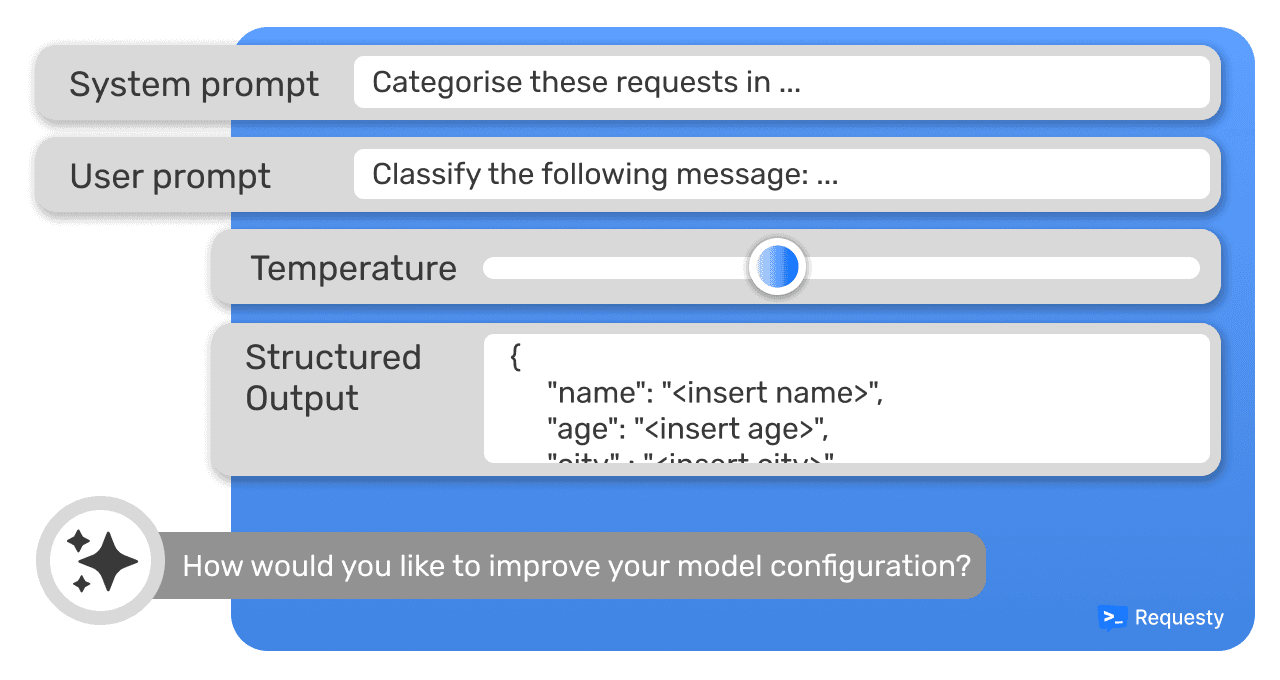

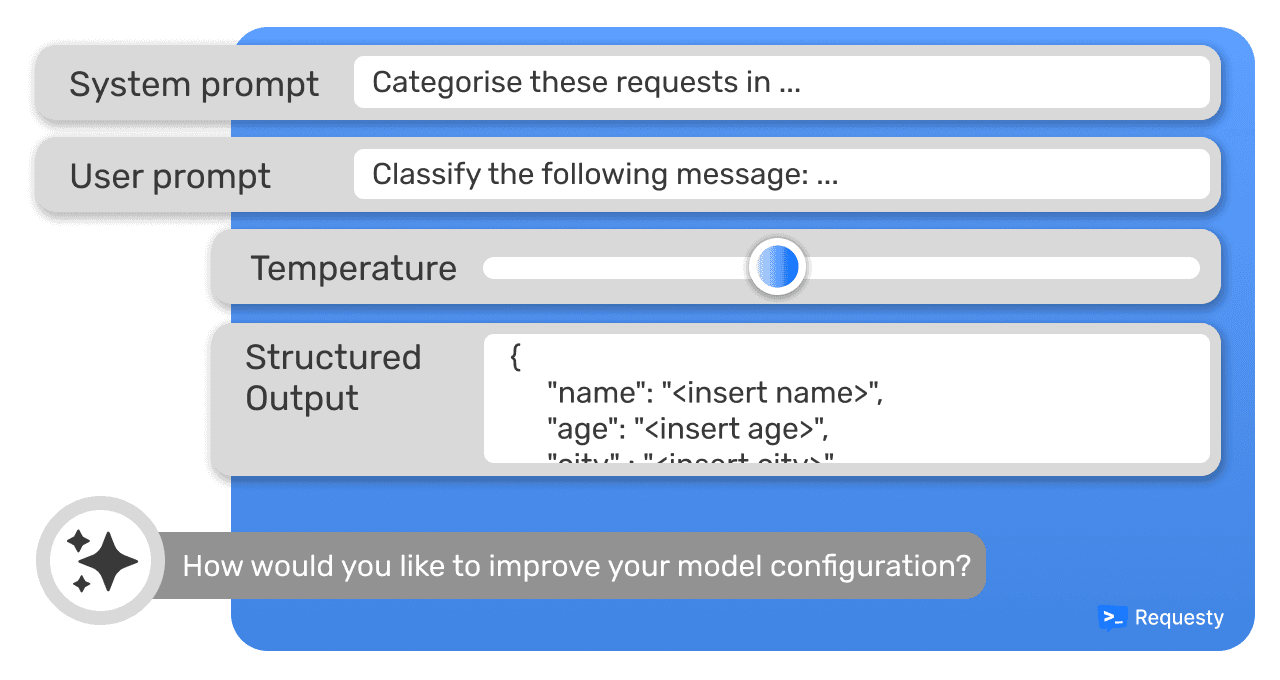

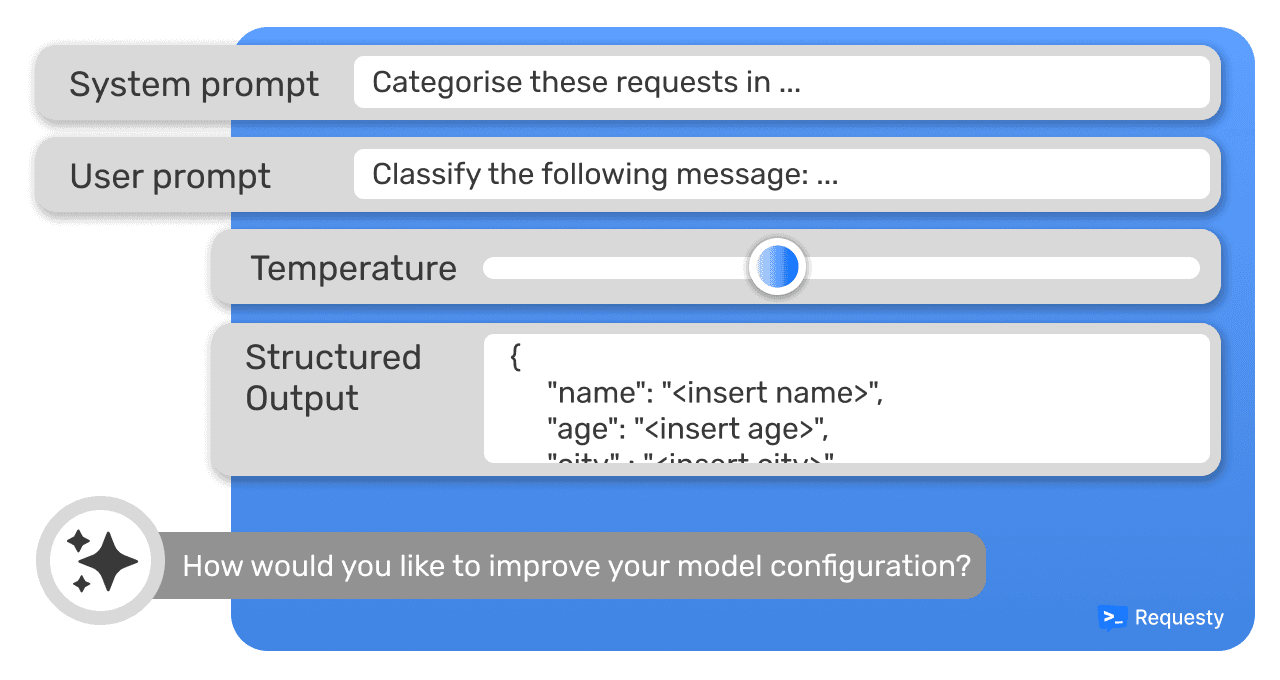

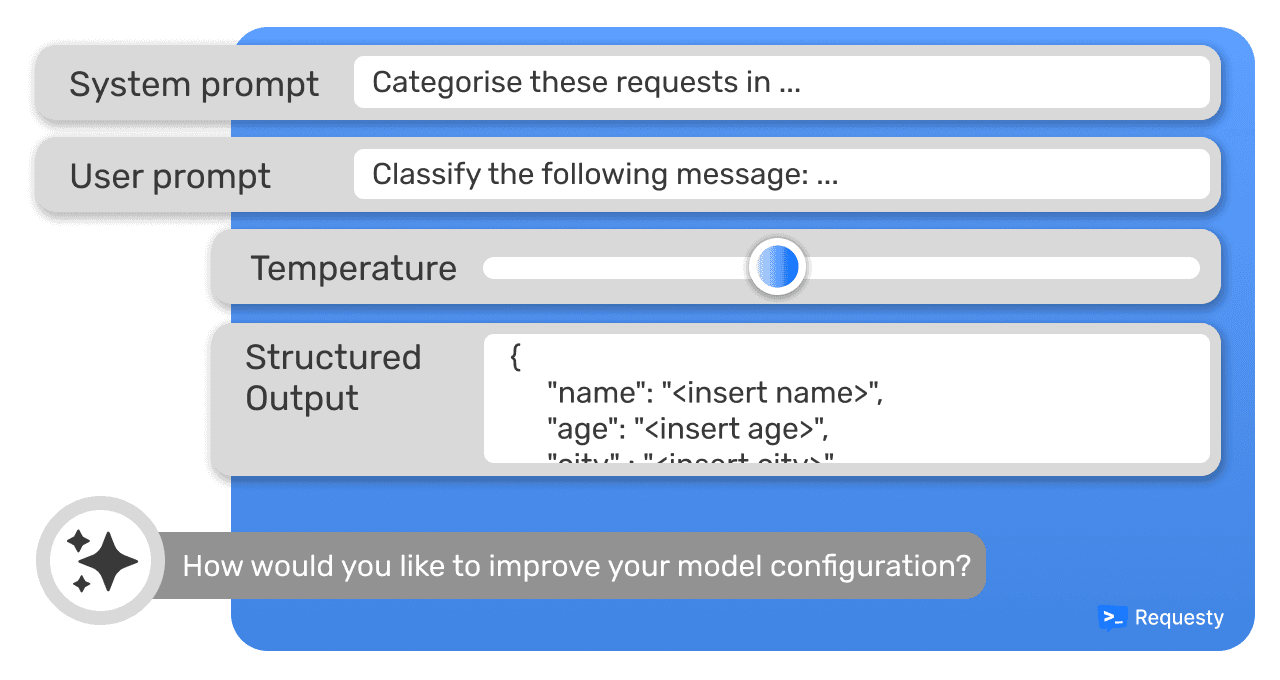

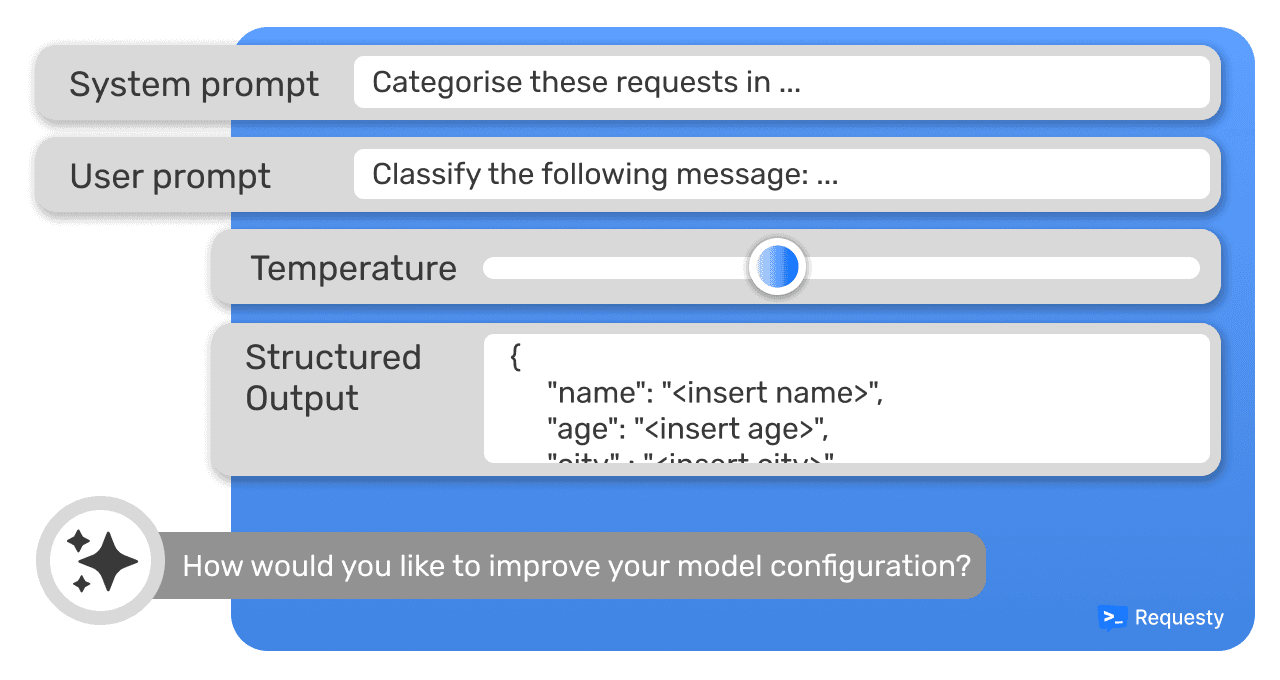

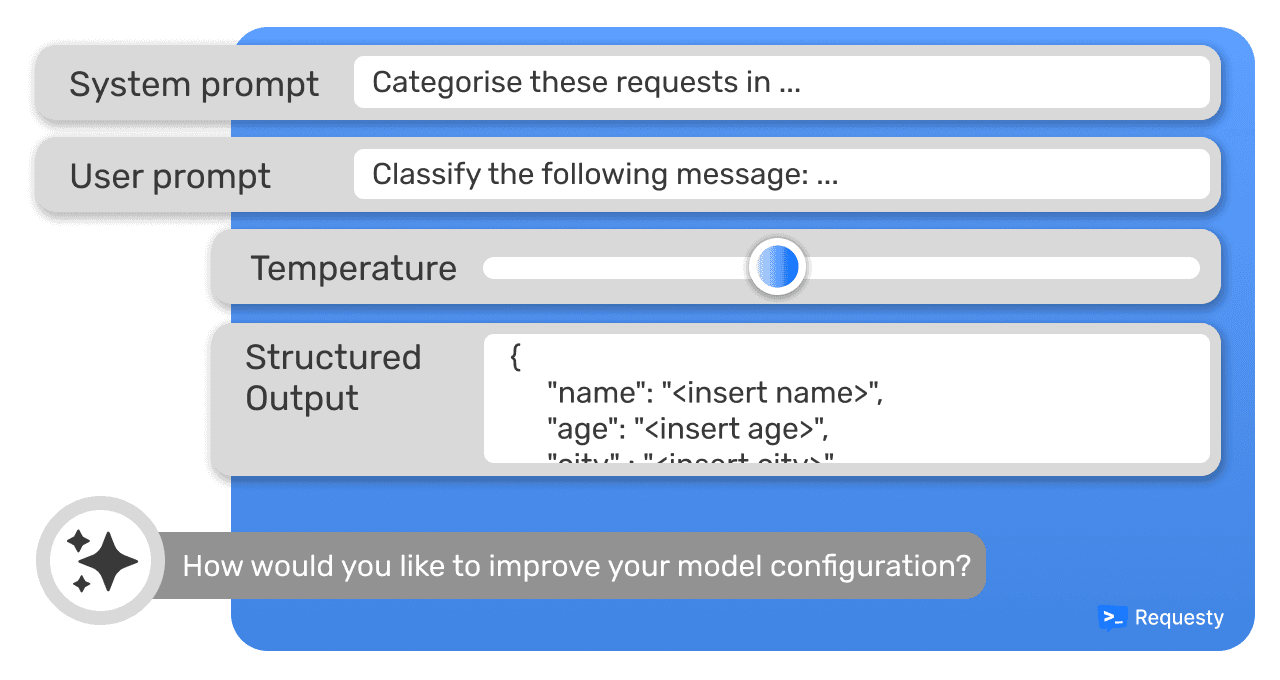

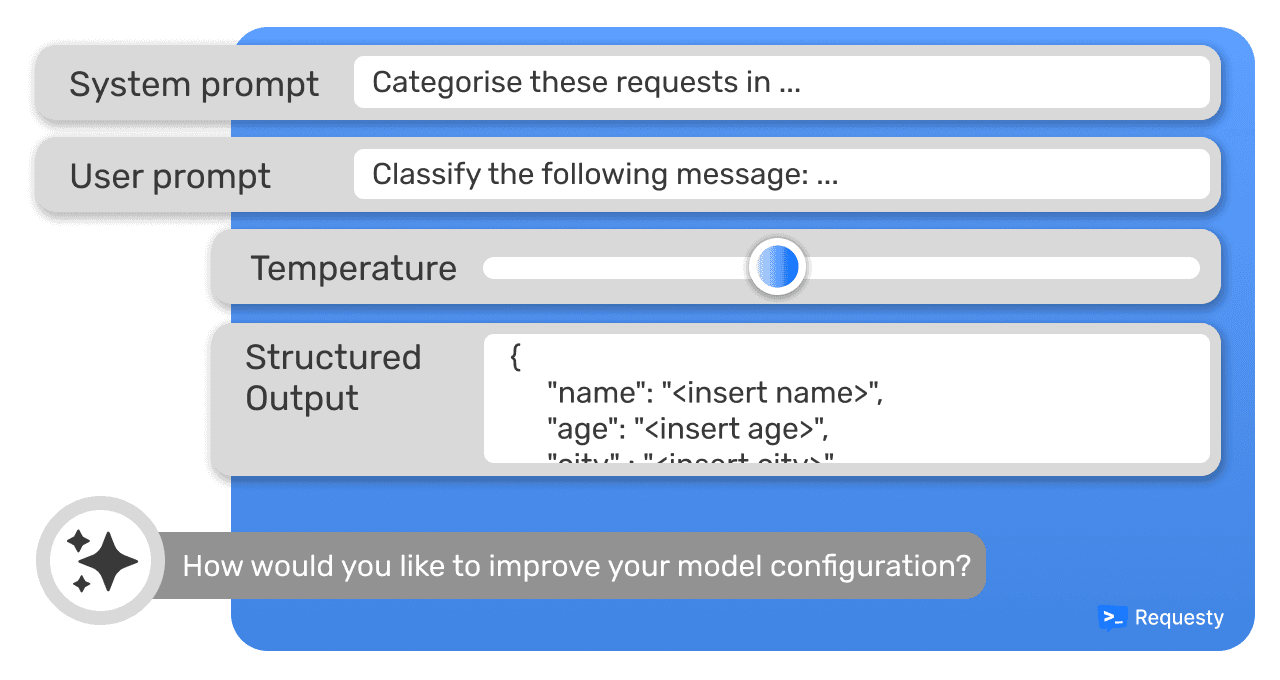

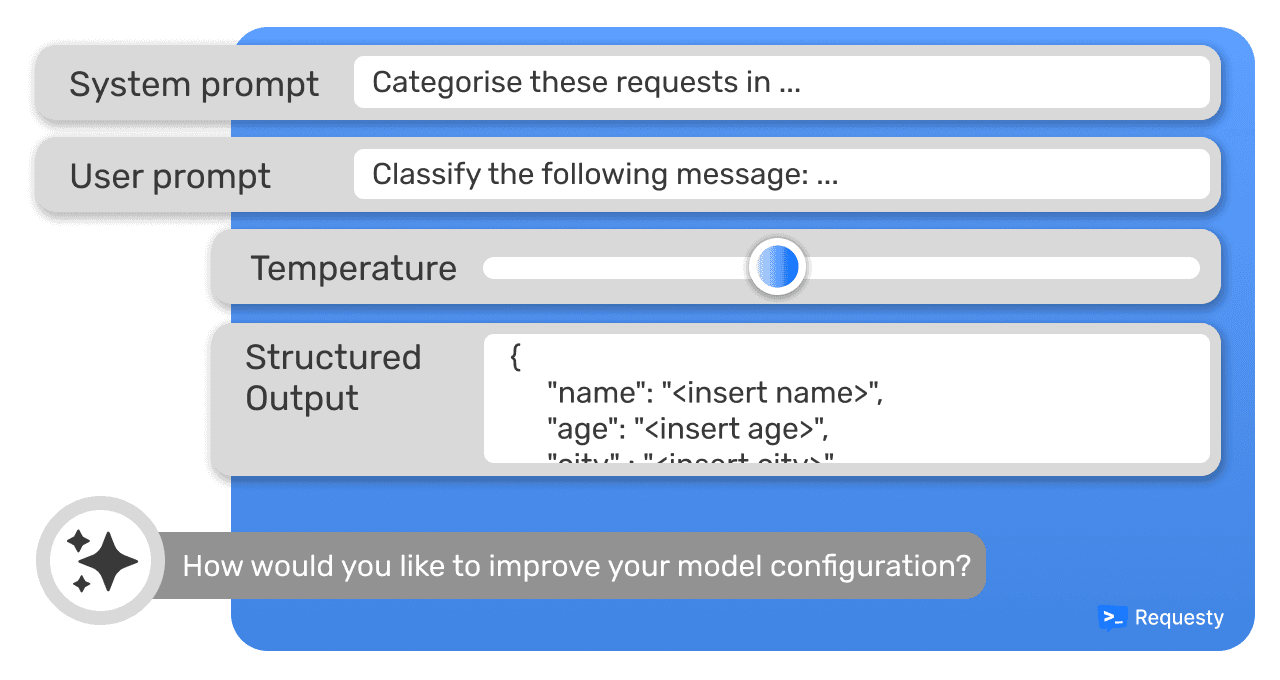

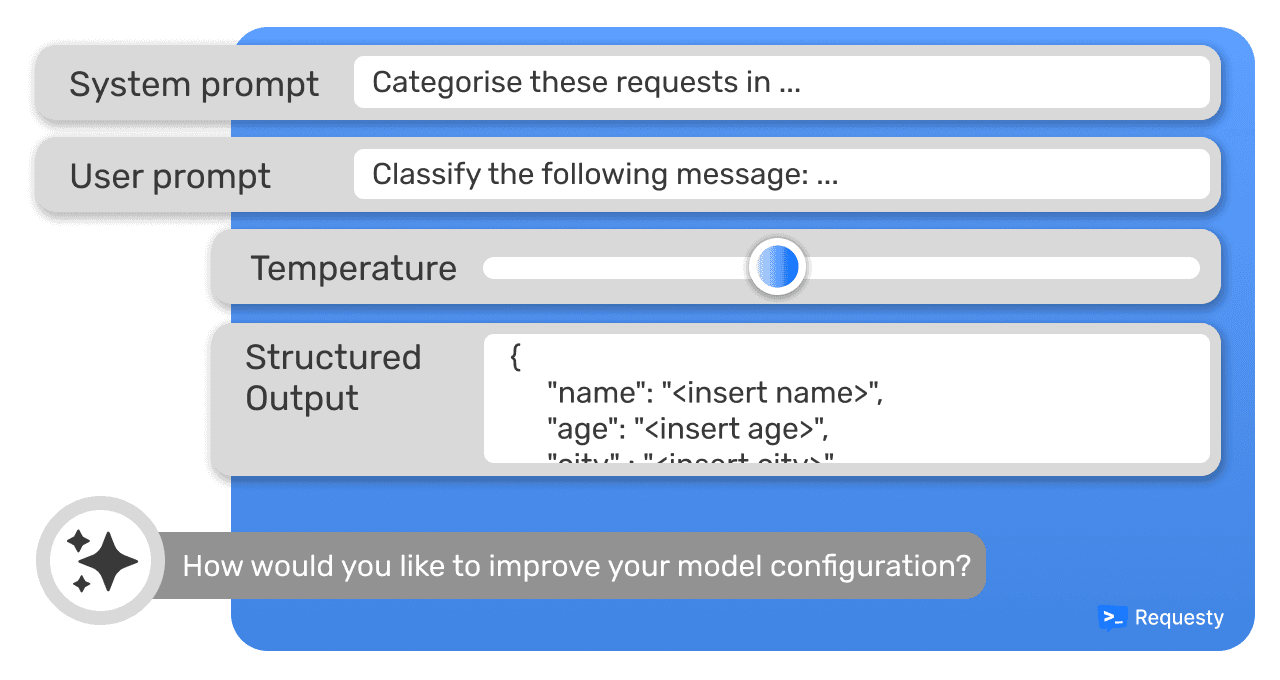

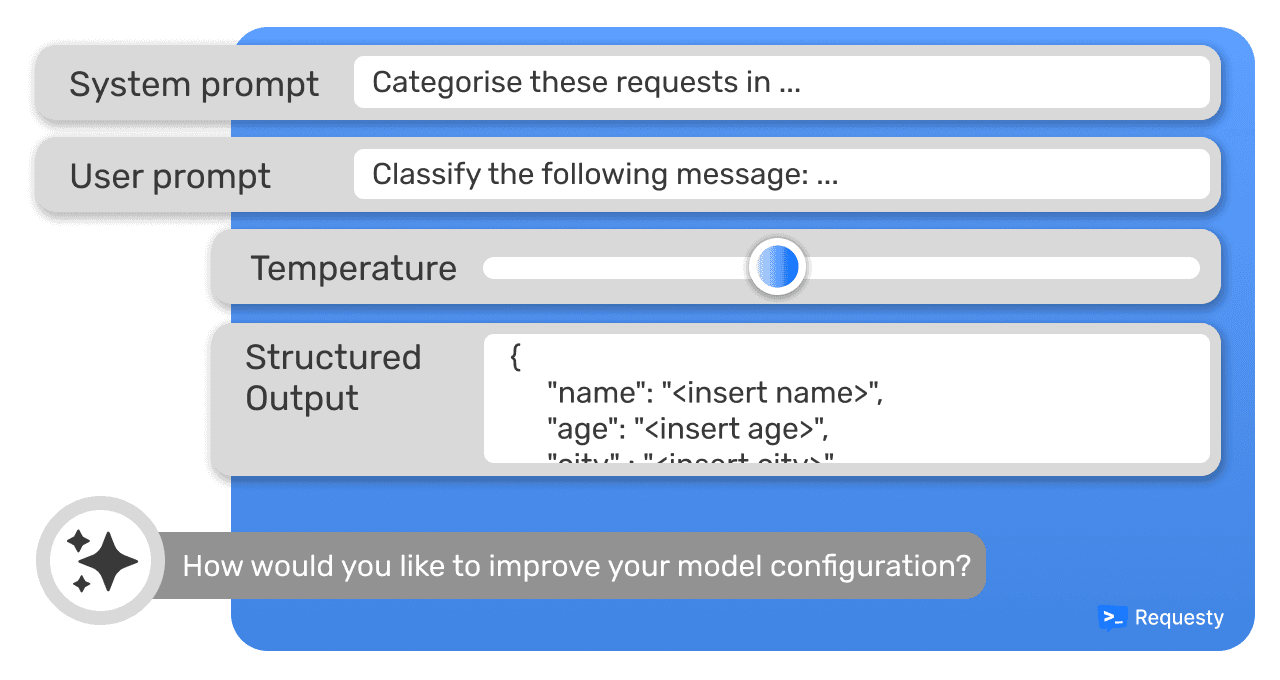

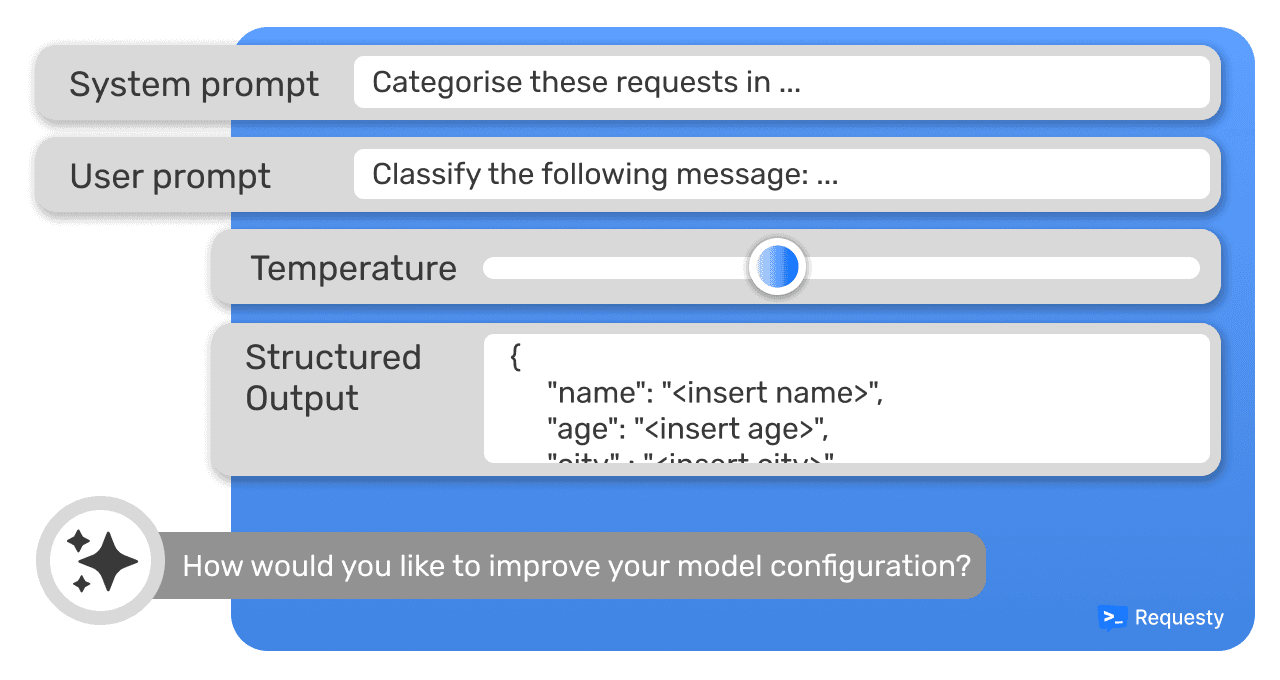

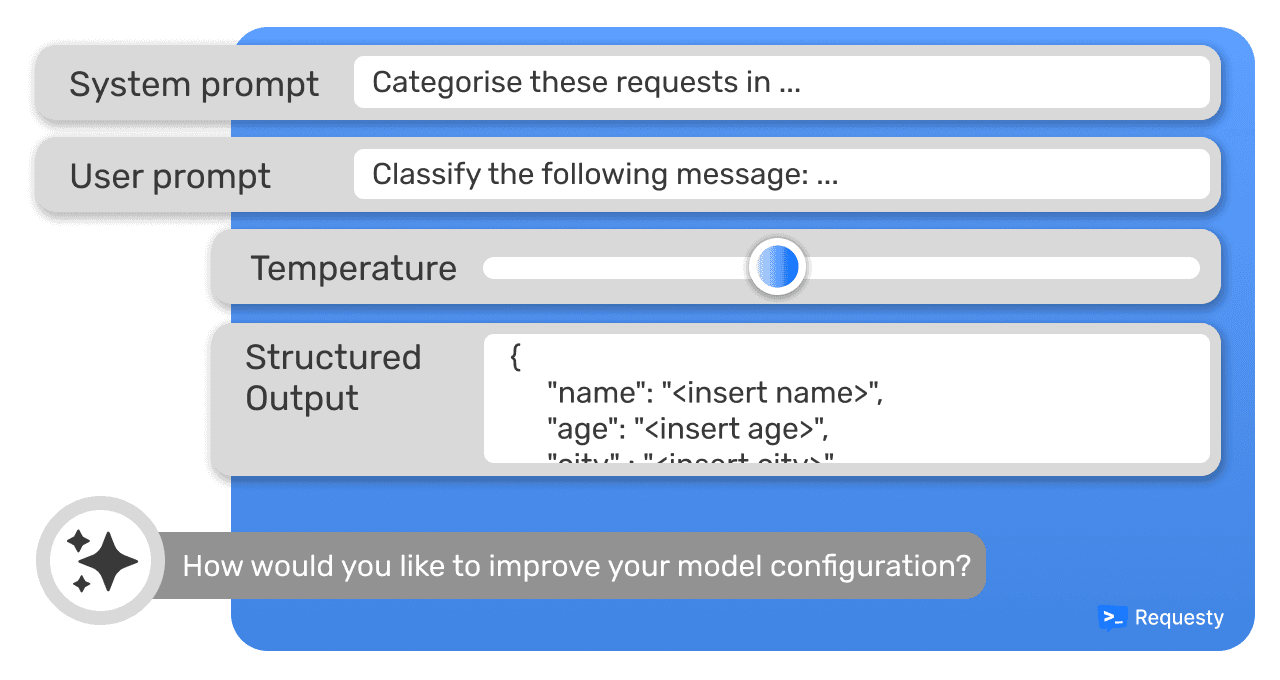

Quick prompt and model configuration

Set up parameterization and structured output requirements for your preferred model with ease. Compare prompt performance for your use case with AI assistance. Run automated evaluations to ensure reliable model performance and output.
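To make "structured output requirements" and "automated evaluation" concrete, here is a minimal sketch of the kind of check such an evaluation could run. It is not the platform's implementation: the field names in `REQUIRED_FIELDS`, the helper functions, and the sample outputs are all hypothetical and chosen only for illustration.

```python
import json

# Hypothetical requirement: every output must be a JSON object with these typed fields.
REQUIRED_FIELDS = {"sentiment": str, "confidence": float}


def meets_requirements(raw_output: str) -> bool:
    """Return True if raw_output is a JSON object with every required field of the right type."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return all(isinstance(data.get(name), kind) for name, kind in REQUIRED_FIELDS.items())


def pass_rate(outputs: list[str]) -> float:
    """Fraction of outputs that satisfy the structured-output requirements."""
    if not outputs:
        return 0.0
    return sum(meets_requirements(o) for o in outputs) / len(outputs)


# Made-up outputs standing in for the responses of two prompt variants.
prompt_a_outputs = ['{"sentiment": "positive", "confidence": 0.9}', "not json"]
prompt_b_outputs = [
    '{"sentiment": "negative", "confidence": 0.7}',
    '{"sentiment": "neutral", "confidence": 0.5}',
]

print("prompt A pass rate:", pass_rate(prompt_a_outputs))
print("prompt B pass rate:", pass_rate(prompt_b_outputs))
```

Comparing pass rates across prompt variants like this is one simple way to decide which prompt is more reliable before it ships.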

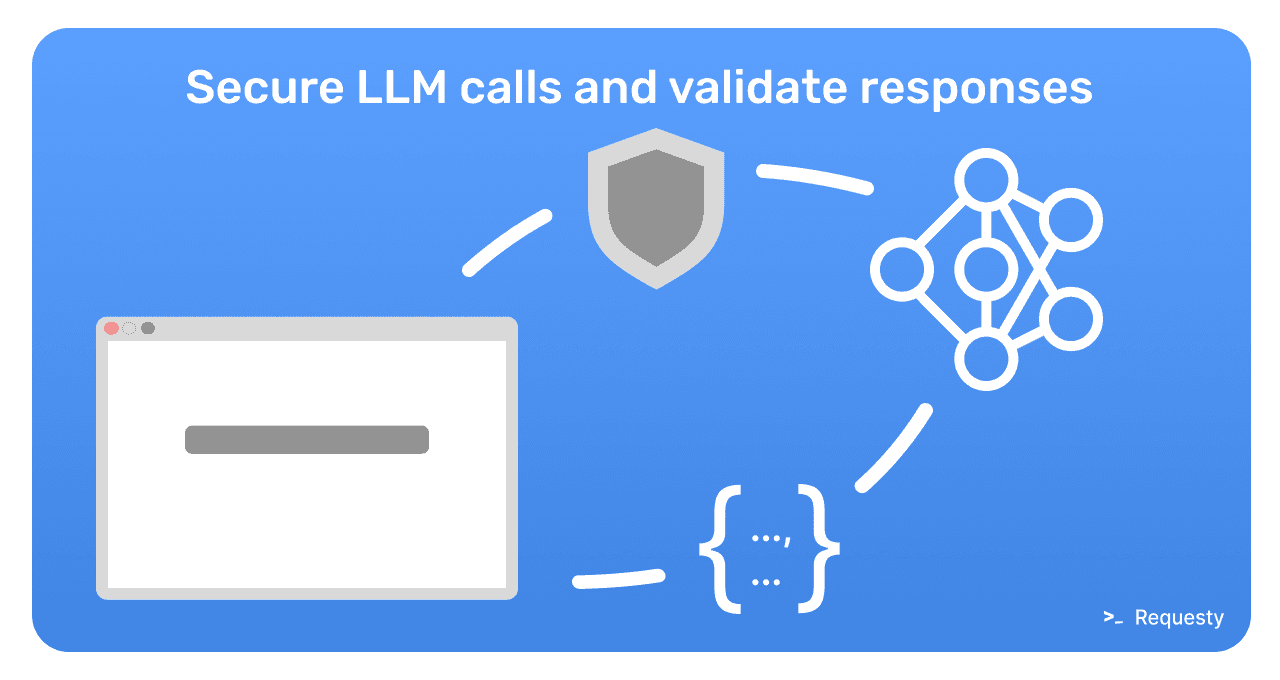

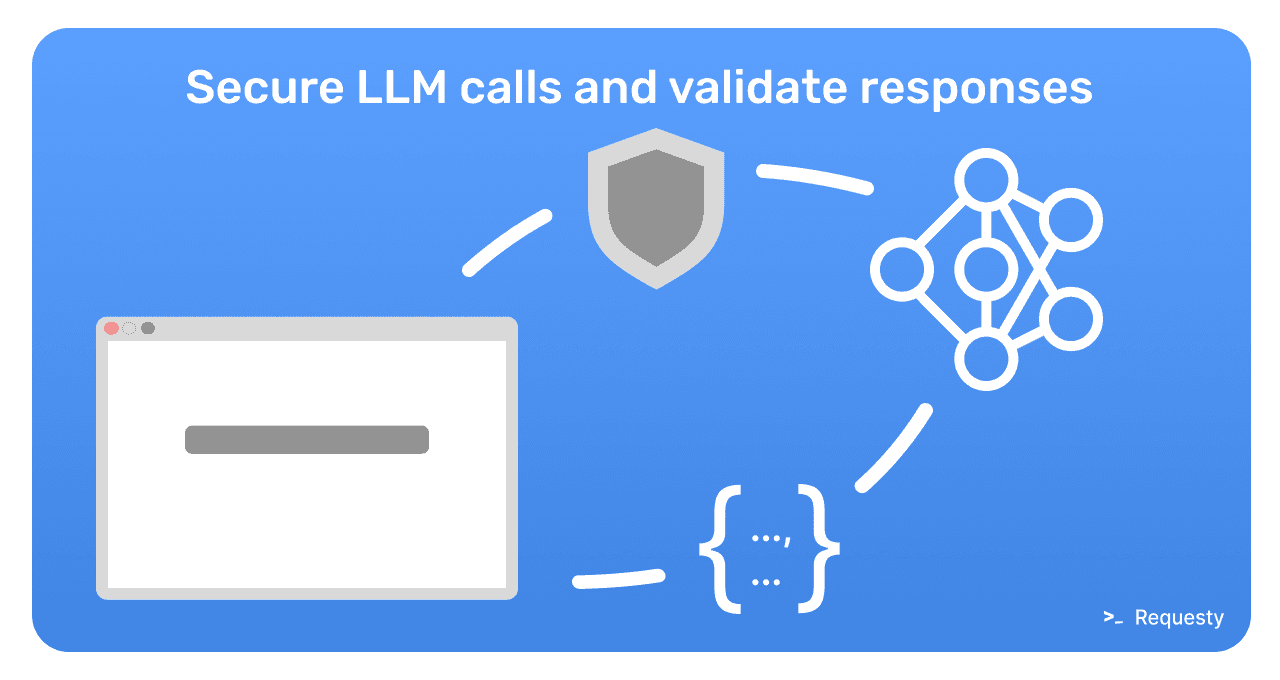

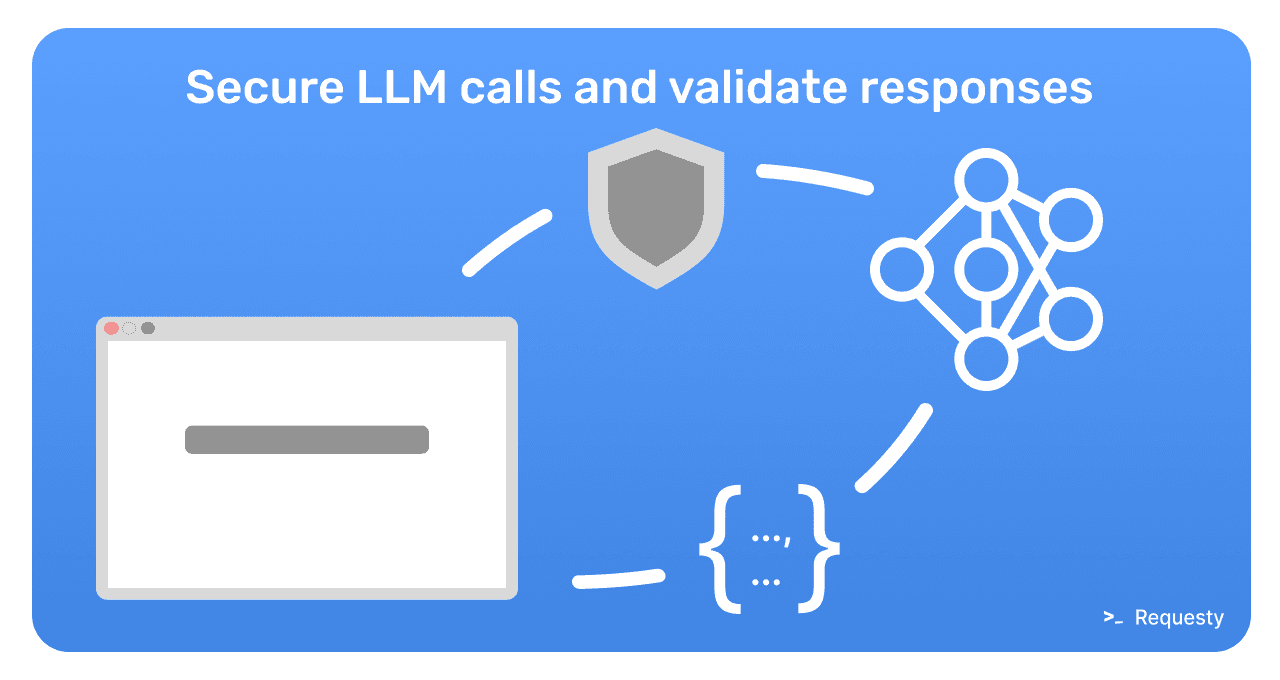

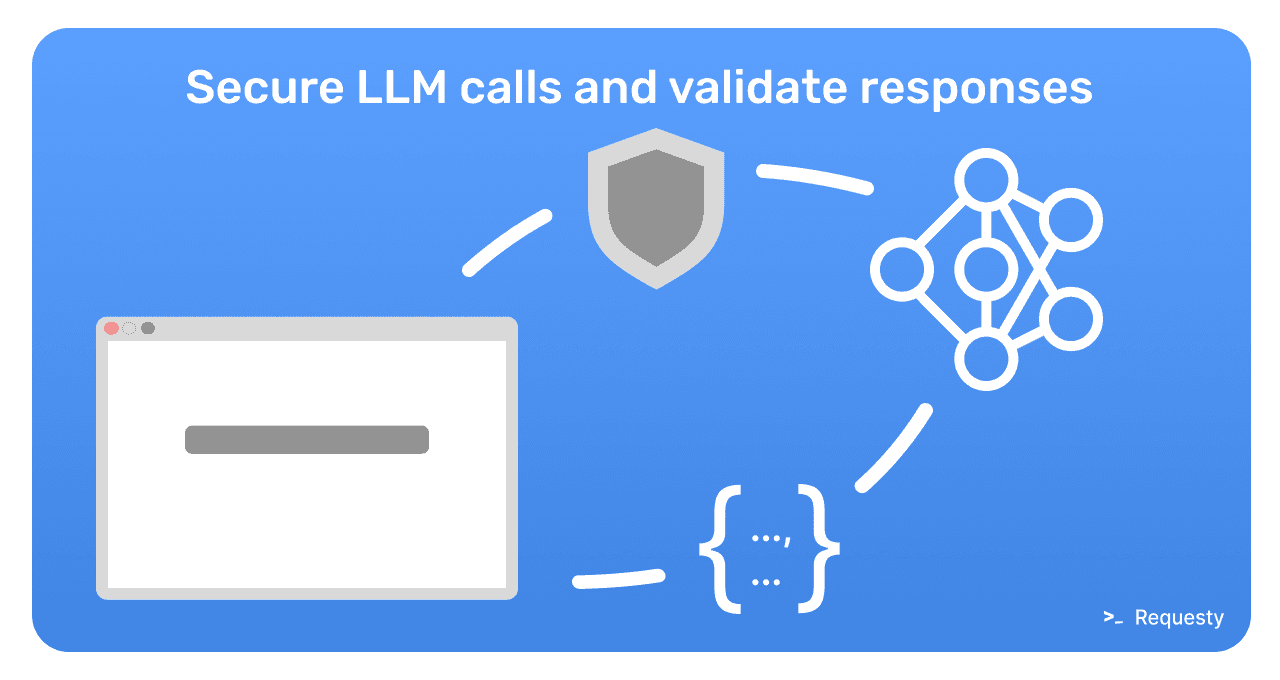

Deploy production LLMs reliably

Don’t worry about the architecture to run your LLM in production. Audit performance, mitigate security risks, and scale inference with ease. Self-host models and inference architecture to keep your data private and secure.

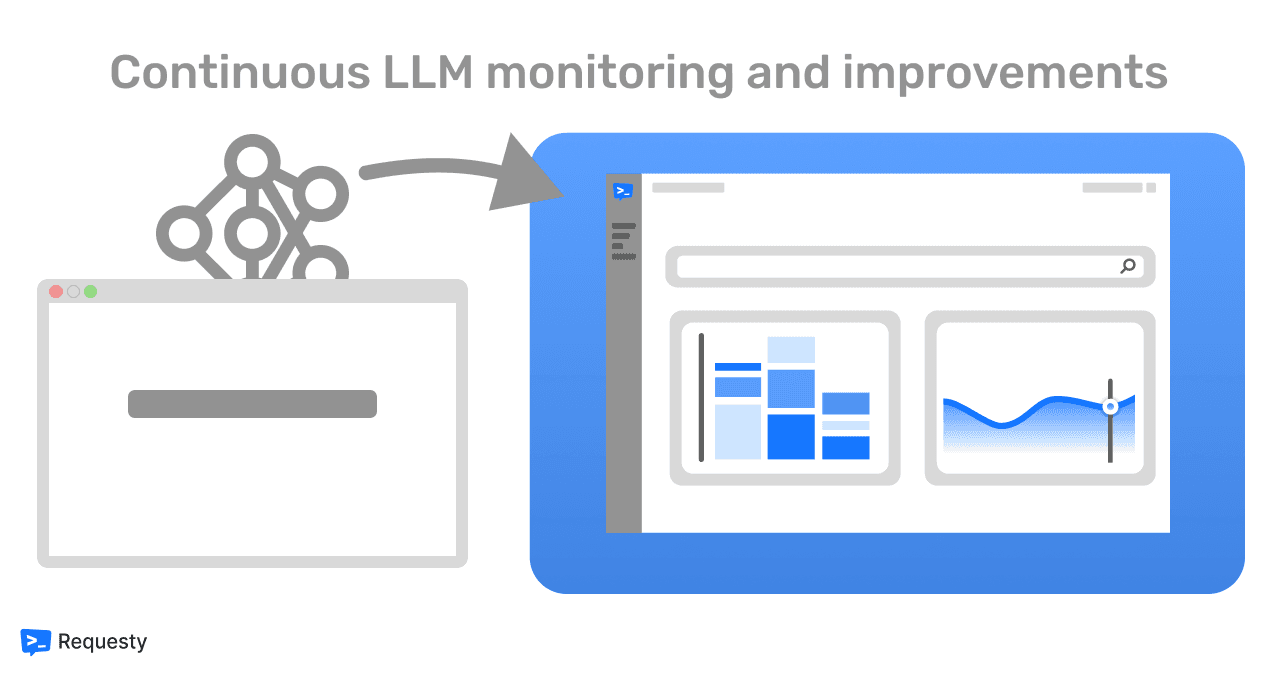

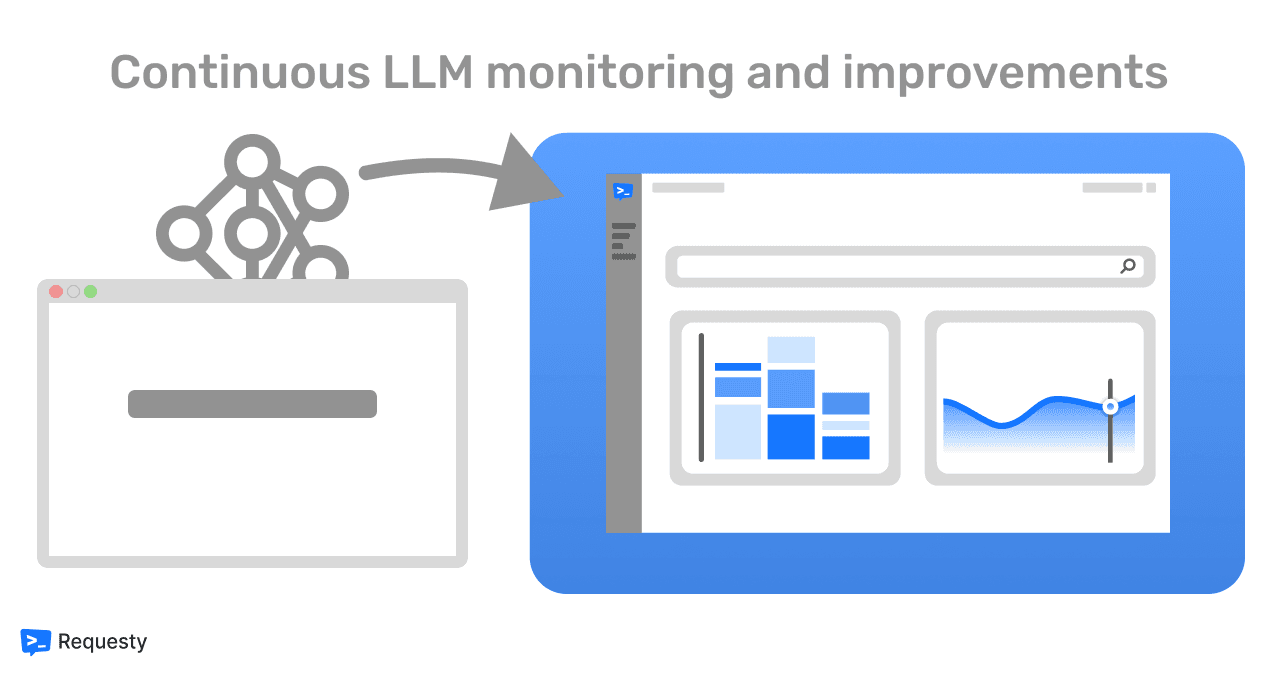

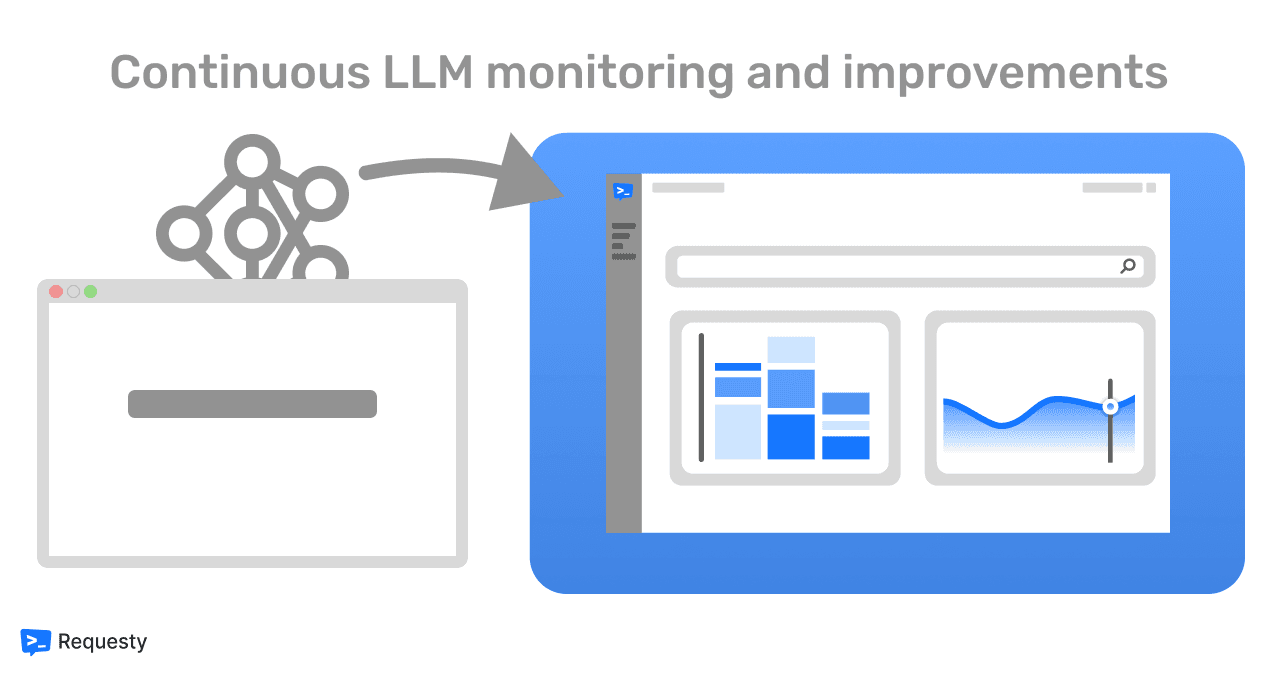

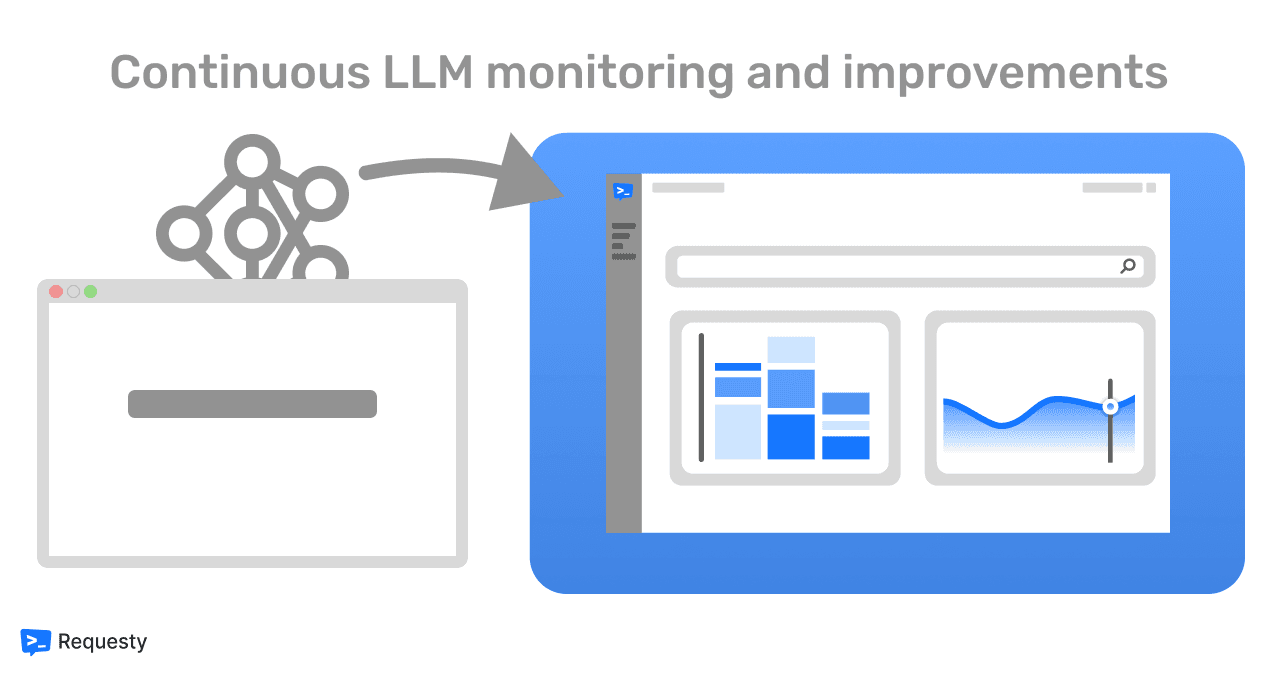

Instant Insights, Dashboards and Reports

Get direct access to usage data, from high-level trends to single user interactions. Auto-categorise and filter based on user attributes and prompt data. Generate charts, compare user segments, build reports and share them with your team within a couple of clicks.

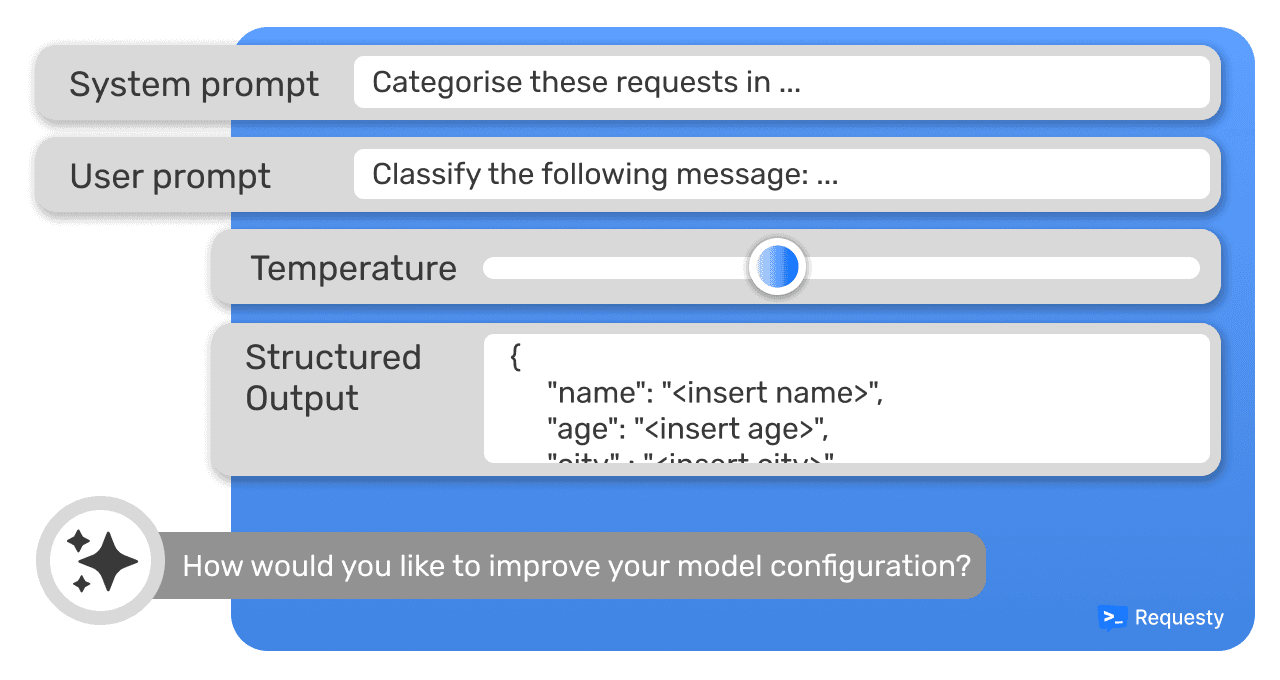

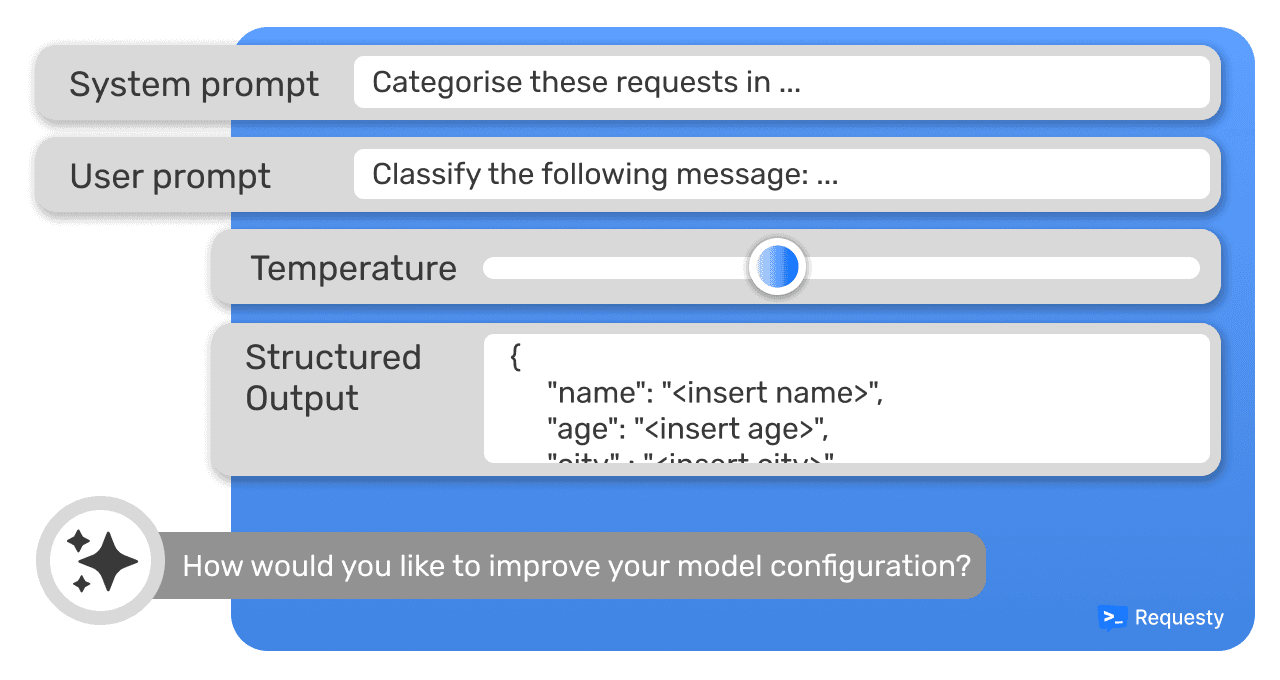

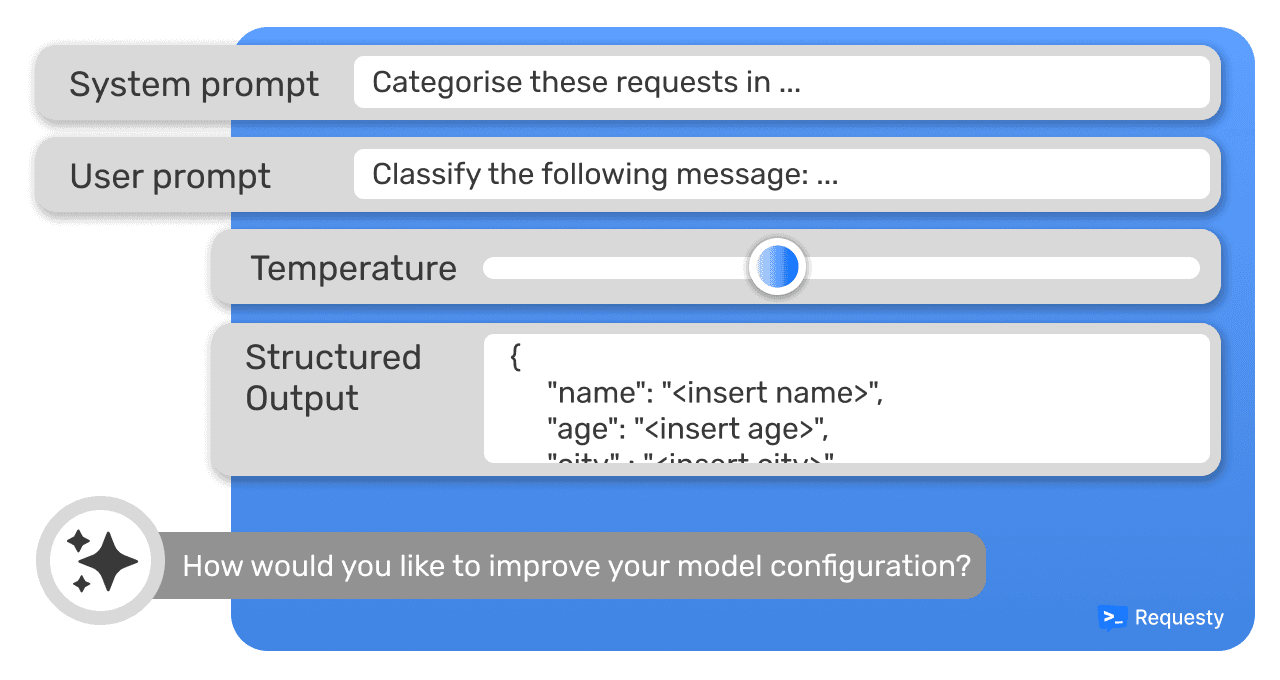

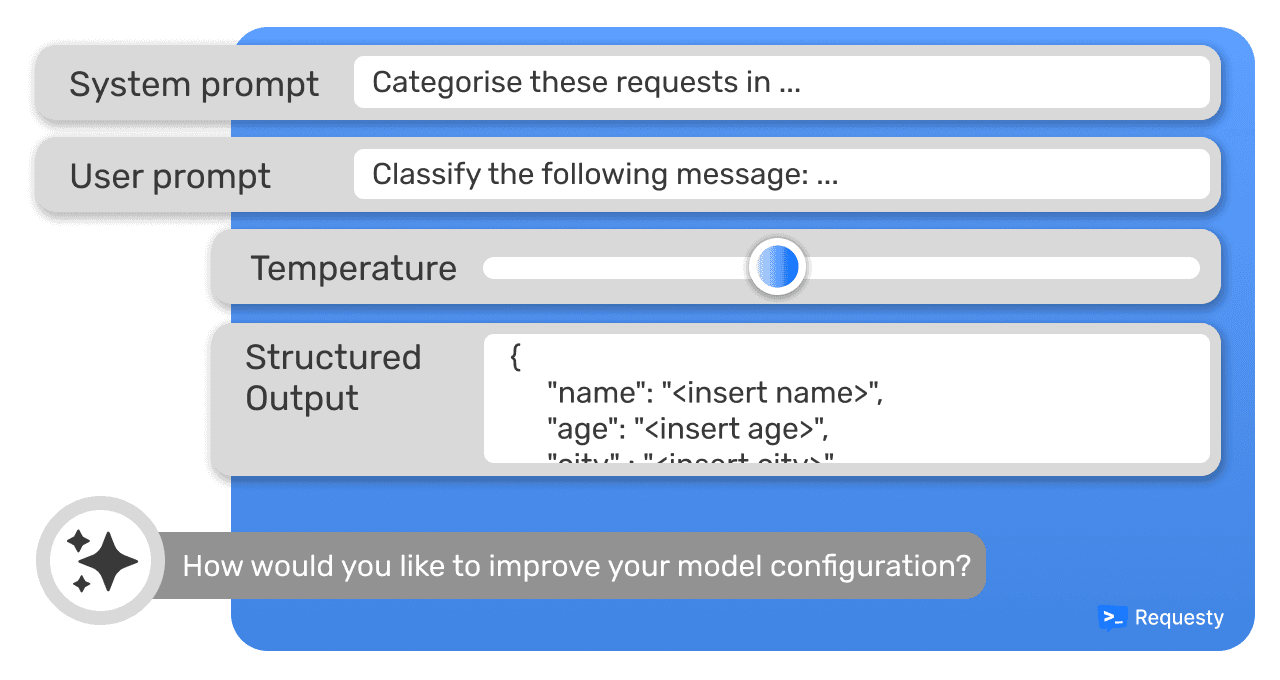

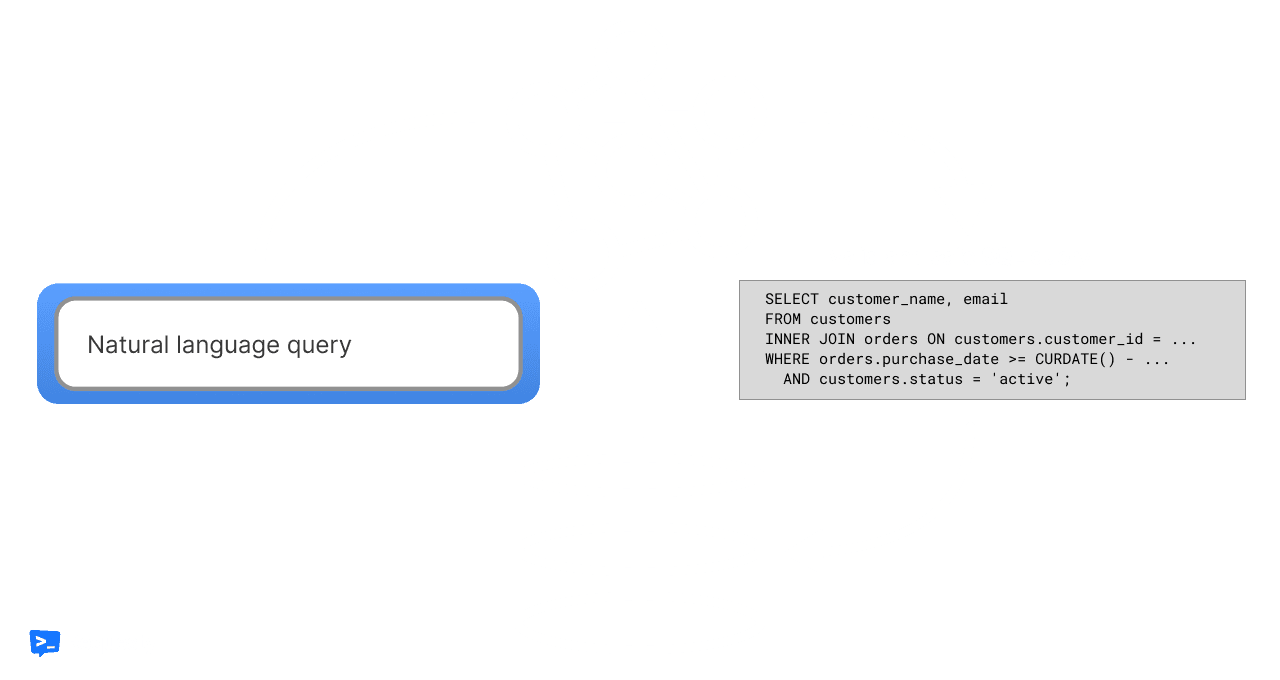

USE CASE

Text2SQL database querying

We brought this feature to production in 1 day rather than the scheduled 3 months. Users interact with an SQL database through natural-language prompts. We covered everything from evaluating the performance of the output to scaling the feature.
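One common way to evaluate Text2SQL output is to run the generated query and a hand-written reference query against the same database and compare the results. The sketch below illustrates that idea with Python's built-in `sqlite3` module. It is an assumption about how such an evaluation could look, not a description of how this feature was actually evaluated; the `orders` table, its rows, and the helper names are hypothetical.

```python
import sqlite3


def run_query(conn: sqlite3.Connection, sql: str):
    """Run sql and return its rows in a stable order, or None if the query fails."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        return None
    # Sort by repr so row order does not affect the comparison.
    return sorted(rows, key=repr)


def execution_match(conn: sqlite3.Connection, generated_sql: str, reference_sql: str) -> bool:
    """True if the generated query returns the same rows as the reference query."""
    expected = run_query(conn, reference_sql)
    actual = run_query(conn, generated_sql)
    return expected is not None and actual == expected


# Hypothetical in-memory database used only for this illustration.
# A real evaluation should run model-generated SQL with read-only access.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "ada", 20.0), (2, "bob", 35.5), (3, "ada", 10.0)],
)

reference = "SELECT customer, SUM(total) FROM orders GROUP BY customer ORDER BY customer"
generated = "SELECT customer, SUM(total) AS spend FROM orders GROUP BY customer"

print("match:", execution_match(conn, generated, reference))
```

Comparing result sets rather than query text means two differently written but equivalent queries still count as a match.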

We've handled

interactions for our customers

Arthur M.

Head of Product @ Pony

Komal C.

CEO @ Zohr

Bilal R.

Head of Product @ Lumos

Tell us what you're building

Are you building an LLM-powered feature?

We’re happy to share best practices!

Speak with the founders